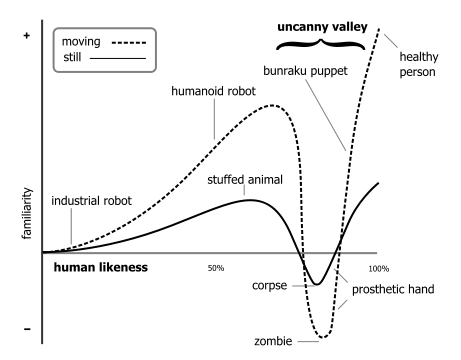

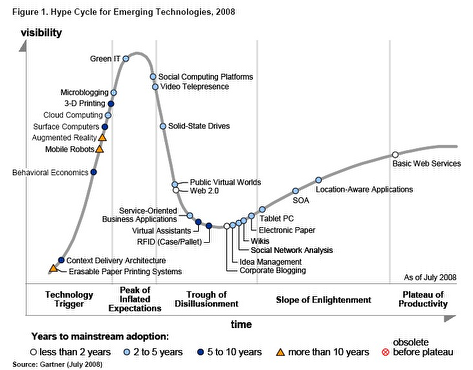

The Metaverse Roadmap Overview, an exploration of imminent 3D technologies, posited a number of different scenarios of what a future "metaverse" could look like. The four scenarios -- augmented reality, life-logging, virtual worlds, and mirror worlds -- each offered a different manifestation of an immersive 3D world. Of the four, I suspect that augmented reality is most likely to be widespread soon; moreover, when it hits, it's going to have a surprisingly big impact. Not just in terms of "making the invisible visible" -- showing us flows and information that we otherwise wouldn't recognize -- but also in terms of the opposite: making the visible invisible.

Augmented reality (AR) can be thought of as a combination of widely accessible sensors (including cameras), lightweight computing technologies, and near-ubiquitous high-speed wireless networks -- a combination that's well underway -- along with a sophisticated form of visualization that layers information over the physical world. The common vision of AR technology includes some kind of wearable display, although that technology isn't as far along as the other components. For that reason, at the outset, the most common interface for AR will likely be a handheld device, probably something evolved from a mobile phone. Imagine holding up an iPhone-like device, scanning what's around you, and seeing various pop-up items and data links on your screen.

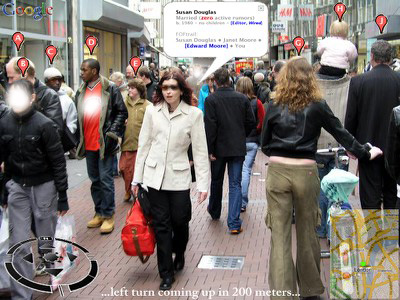

That's something like what an early AR system might look like.

I have what I think is a healthy, albeit a bit perverse, response when I think about new technologies: I wonder how they can be used in ways that the designers never intended. Some such uses may be beneficial (think of them as "off-label" uses), while others will be malign. William Gibson's classic line that "the street finds its own uses for things" captures the ambiguity of this question.

The "maker society" argument that has so swept up many in the free/open source world is a positive manifestation of the notion that you don't have to be limited to what the manufacturer says are the uses of a given product. A philosophy that "you only own something if you can open it up" pervades this world. There's certainly much that appeals about this philosophy, and it's clear that hackability can serve as a catalyst for innovation.

You're probably a bit more familiar with a basic example of the negative manifestation: spam and malware.

The Internet, email, the web, and the various digital delights we've brought into our lives were not designed with advertising or viruses in mind. It turned out, however, that the digital infrastructure was a lush environment for such developments. Moreover, the most effective steps we could take to put a lid on spam and malware would also undermine the freedom and innovative potential of the Internet. The more top-down control there is in the digital world, the less of a chance spam and malware have to proliferate, but the less of a chance there is to do disruptive, creative things with the technology. The Apple iPhone application store offers a clear example of this: the vetting and remote-disable process Apple uses may make harmful applications less likely to appear, but also eliminates the availability of applications that do things outside of what the iPhone designers intended. (Fortunately, the iPhone isn't the only interesting digital tool around.)

It seems likely to me that an augmented reality world that really takes off will, out of necessity, be one that offers freedom of use closer to that of the Internet than of the iPhone. Top-down control technologies will certainly make a play for the space, but they simply won't be the kind of global catalyst for innovation that an open augmented reality web would be. An AR world dominated by closed, controlled systems will be safe, but have a limited impact.

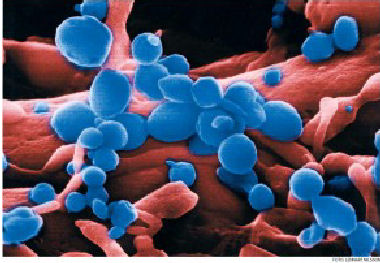

This means, therefore, that we should expect to see spam and malware finding their way into the AR world soon after it emerges. Of the two, malware is more of a danger, but also more likely to be controllable by good system design (just as modern operating systems are more resistant to malware than the OSes of a decade ago). Spam, conversely, is unlikely to be stopped at its source; instead, we'll probably use the same reasonably functional solution we use now: filtering. Recipient-side filtering has become quite good, and users with well-trained spam filters see just a tiny fraction of their incoming junk email. Spam is by no means a solved problem, but it's become something akin to a chronic, controllable disease.

It turns out, though, that the development of filtering systems for augmented awareness technologies would offer startling opportunities to construct our own visions of reality.

As an AR user, I would want to avoid seeing pop-up labels or data-limnals advertising products or services I wasn't explicitly looking for. Take the hand-held AR image above -- if you look closely, you'll see that there's a pop-up advertisement visible. Is this spam? Or just a regular ad? If we define spam as an "unwanted commercial message," it's definitely that, even if there's no attempt to hide where it comes from.

Spam or ad, it's just the sort of thing I'd want suppressed. But what if, besides being annoyed by the digital ad, I wanted to get rid of the physical world ads, too? Wouldn't it be nice to block any kind of unwanted commercial message? Not much of a point in doing so with a hand-held device, of course; but if we are moving to a world of wearable augmented reality displays (as glasses, perhaps, or as Vernor Vinge describes in Rainbows End, as contact lenses), then something that would let me block images I didn't like might become more useful.

As long as the AR device is a passive artifact, only responding to messages sent to it by local info-tags, it's limited as to what it can block. But most discussions of augmented reality embrace the notion that the AR systems will have cameras to be able to observe the world around you, and to "notice" things that you'd find interesting. Connected to the net and various data sources, such a system would be able to tell you quite a bit about what -- and who -- you're looking at. Here's an image I've used for a while, showing an active AR system with a reputation manager application:

So let's combine these ideas.

The camera-enabled augmented reality device, able to do basic image recognition (probably a bit of best-guess text recognition, combined with map records of what's around, local tags, etc.), could easily include a feature that blocks not only the digital ads you don't want to see, but the physical-world ads as well. Given how popular ad-blocking widgets are for web browsers, and the fast-forward-over/skip commercial features of TiVos, such a system is almost over-determined once the pieces become available. With the first version of the AR device, this gives you something like...

But remember that this technology now can recognize people (either by face, or by what they carry). What if, instead of just blocking advertisers, I wanted to block out the people who annoyed me? Let's say (to be non-partisan this time), I didn't like anyone who worked in the advertising industry, and I didn't even want to see their faces. That leads to...

Of course, all of those blurry, whited-out spaces can get annoying and distracting, so I'd want to replace them with alternative images. Ads I do want, possibly, or images pulled from my own photo/art stream. For the faces, probably just random recently-seen (but not recognized as "known") faces from other people. It doesn't have to be perfect, it just has to be enough to not interrupt your attention (in fact, you'd want it to be slightly imperfect, so that you don't mistakenly try to read a sign or speak to someone you're actually blocking).
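To make the block-or-replace logic a little more concrete, here's a minimal sketch of how such a filter might decide what to do with each thing the device recognizes. Everything in it is an assumption for illustration: the `Detection` record, the category names, and the `ViewFilter` class are hypothetical, and the hard part (actually recognizing ads and people) is assumed to have happened already.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Detection:
    """One item a (hypothetical) recognizer found in the current view."""
    label: str      # e.g. "popup", "billboard", "person"
    category: str   # e.g. "ad", "advertising-industry", "street-sign"
    source: str     # "digital" for AR overlays, "physical" for real-world objects


@dataclass
class ViewFilter:
    """User-side rules: what to block, and what to show in its place."""
    blocked_categories: set = field(default_factory=set)
    # Maps a blocked category to the name of a pool of substitute images.
    replacements: dict = field(default_factory=dict)

    def decide(self, detection: Detection) -> str:
        # Anything the user hasn't blocked passes through untouched.
        if detection.category not in self.blocked_categories:
            return "show"
        # Blocked, with a substitute pool available: swap it in.
        if detection.category in self.replacements:
            return "replace:" + self.replacements[detection.category]
        # Blocked, but nothing to swap in: fall back to a blur.
        return "blur"


my_filter = ViewFilter(
    blocked_categories={"ad", "advertising-industry"},
    replacements={"ad": "my-photo-stream"},
)

view = [
    Detection("popup", "ad", "digital"),
    Detection("billboard", "ad", "physical"),
    Detection("person", "advertising-industry", "physical"),
    Detection("sign", "street-sign", "physical"),
]

for item in view:
    print(item.label, "->", my_filter.decide(item))
```

Note that the rule doesn't care whether the item is a digital overlay or a physical object; once the device can recognize both, the same filter applies to both.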

This probably seems a bit fanciful, an excuse to play with Photoshop a bit. But this is something I've been mulling for some time, and it feels more likely every time I come back to it. The moment that we can easily display location-aware images on an augmented reality system, we'll have people trying to block images they don't like. Forget ads (or advertisers) -- we'll have people wanting to block even slightly suggestive images, people with beliefs they don't like, anything that would upset the version of reality they've built for themselves.

The flip side of "show me everything I want to know about the world" is "don't show me anything I don't want to know."