Four Futures for the Earth

by Jamais Cascio

Never trust a futurist who only offers one vision of tomorrow.

We don't know what the future will hold, but we can try to tease out what it might. Scenarios, which combine a variety of important and uncertain drivers into a mix of different -- but plausible -- futures, offer a useful methodology for coming up with a diverse set of plausible tomorrows. Scenarios are not predictions, but examples, giving us a wind-tunnel to test out different strategies for managing large, complex problems.

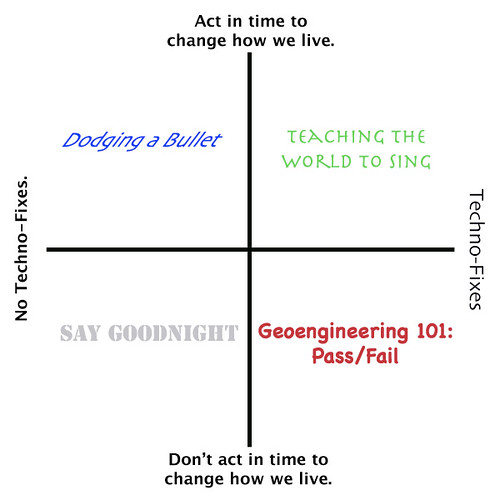

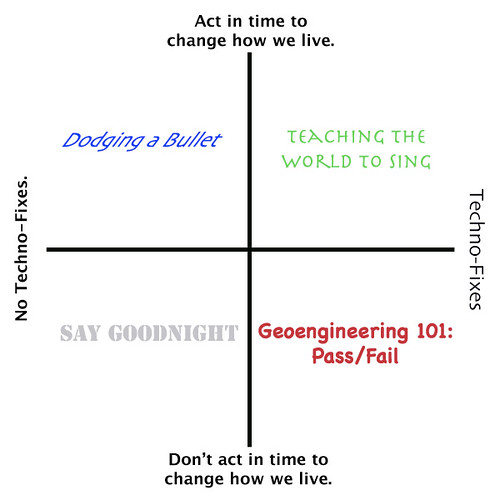

And there really isn't a bigger or more complicated problem right now than the incipient climate disaster. Today, there seem to be two schools of thought regarding the best way to deal with global warming: the "act now" approach, demanding (in essence) that we change our behavior and the ways that our societies are structured, and do it as quickly as possible, or else we're boned; and the "techno-fix" approach, which says (in essence) don't worry, the nano/info/bio revolution that's just around the corner will save us. Generally, the Worldchanging approach is to emphasize the first, with a sprinkle of the second for flavor (and as backup).

The thing is, these are not mutually exclusive propositions, and success or failure in one doesn't determine the chance of success or failure in the other. It's entirely possible that we will change our behavior/society/world (ahem), and also come up with fantastic new technologies; it's also possible that we'll stumble on both paths, neither fixing things in time nor getting our hands on the tools we could use to repair the worst damage.

To a futurist, a pair of distinct, largely independent variables just begs to be turned into a scenario matrix. So let's give in, and take a brief look at the four scenarios the combinations of these two paths create:

Dodging a Bullet

2037: It's amazing how fast we went from "is this real?" to "what can we do?" to "let's do it now." There was no silver bullet, no green leap forward, just a billion quiet decisions to act. People made better, smarter choices, and the headlong rush to disaster slowed; encouraged by this, we started to focus our investments and social energy into solving this problem, and eventually (but much faster than we'd dared hope!) the growth of atmospheric carbon stopped. There's still too much CO2 in the air, and we know we're going to be dealing with a warming climate for a while still, but the human species actually managed to choose to avoid killing itself off.

This is a world in which civil society begins to focus on averting climate disaster as its primary, immediate task, even at the cost of some economic growth and general technological acceleration. Most governments and institutions curtail research and development without direct climate benefits, leading to a world of 2037 that's nowhere near as advanced as futurists and technology enthusiasts had expected. A succession of environmental disasters linked (in the public mind, at the very least) to global warming -- killing hundreds of thousands, and leaving tens of millions as refugees -- gave added impetus to a world-wide effort; by 2017, a clear majority of the world's population was willing to do anything necessary to avoid the environmental collapse that many scientists saw as nearly inevitable. One popular slogan for the climate campaign was "we could be the best, or we could be the last."

Teaching the World to Sing

02037: I stumbled across a memory archive from twenty years ago, before the emergence of the Chorus, and was shocked to see the Earth as it was. Oceans near death, climate system lurching towards collapse, overall energy flux just horribly out-of-balance. I can't believe the Earth actually survived that. I had assumed that the Chorus was responsible for repairing the planet, but no -- We told me that, even by 02017, the Earth's human populace was making the kind of substantive changes to how it lived necessary to avoid real disaster, and that 02017 was actually one of the first years of improvement! What the Chorus made possible was the planetary repair, although We says that this project still has many years left, in part because We had to fix some of We's own mistakes from the first few repair attempts. The Chorus actually seemed embarrassed when We told me that!

This is a world in which immediate efforts to make the social and behavioral changes necessary to avoid climate disaster make possible longer-term projects to apply powerful, transformative technologies (such as molecular manufacturing and cognitive augmentation) to the problem of stabilizing and, eventually, repairing the broken environment. It's not quite a Singularity, but is perhaps something nearly as strange: a world that has come to see few differences between human systems and natural/geophysical systems. "We are Gaia, too," the aging (but quite healthy) James Lovelock reminded us in 2023. And Gaia is us: billions of molecular-scale eco-sensors and intelligent simulations give the Earth itself an important voice in the global Chorus.

Geoengineering 101: Pass/Fail

2037: The Hephaestus 2 mission reported last week that it had managed to stabilize the wobble on the Mirror, but JustinNN.tv blurbed me a minute ago that New Tyndall Center is still showing temperature instabilities. According to Tyndall, that clinches it: we have another rogue at work. NATO ended the last one with extreme prejudice (as dramatized in last summer's blockbuster, "Shutdown" -- I loved that Bruce Willis came out of retirement to play Gates), but this one's more subtle. My eyecrawl has some bluster from the SecGen now, saying that "this will not stand," blah blah blah. I just wish that these boy geniuses (and they're all guys, you ever notice that?) would put half as much time and effort into figuring out the Atlantic Seawall problem as they do these crazy-ass plans to fix the sky.

This is a world in which attempts to make the broad social and behavioral changes necessary to avoid climate disaster are generally too late and too limited, and the global environment starts to show early signs of collapse. The 2010s to early 2020s are characterized by millions of dead from extreme weather events, hundreds of millions of refugees, and a thousand or more coastal cities lost all over the globe. The continued trend of general technological acceleration gets diverted by 2020 into haphazard, massive projects to avert disaster. Few of these succeed -- serious climate problems hit too fast for the more responsible advocates of geoengineering to get beyond the "what if..." stage -- and the many that fail often do so in a spectacular (and legally actionable) fashion. Those that do work serve mainly to keep the Earth poised on the brink: bioengineered plants that consume enough extra CO2 and methane to keep the atmosphere stable; a very slow project to reduce the acidity of the oceans; and the Mirror, a solar shield thousands of miles in diameter at the Lagrange point between the Earth and the Sun, reducing incoming sunlight by 2% -- enough to start a gradual cooling trend.

Say Goodnight

2030-something. Late in the decade, I think. Living day-to-day makes it hard to keep track of the years. The new seasons don't help -- Stormy, Still Stormy, Hellaciously Stormy, and Blast Furnace -- and neither does the constant travel, north to the Nunavut Protectorate, if it's still around. I hear things are even worse in Europe, if you can believe that. I don't hear much about Asia anymore, but I suppose nobody does now. The Greenland icepack went sometime in the last few years, and I hear a rumor that Antarctica is starting to go now. Who knows? I still see occasional aircraft high overhead, but they mostly look like military planes, so don't get your hopes up: they're probably from somebody who thinks it's still worth it to fight over the remaining oil.

This is a world in which we don't adopt the changes we need, and technology-based fixes end up being too hard to implement in sufficient quantity and scale to make a real difference. Competition for the last bit of advantage (in economics, in security, in resources) accelerates the general collapse. Things fall apart; the center does not hold; mere anarchy is loosed upon the world.
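For anyone who wants the scenario matrix spelled out, here is a minimal sketch of how the four futures above fall out of the two drivers. The scenario names come from this essay; the axis labels ("act now" and "techno-fix" each either working or failing) and the function name `scenario_matrix` are illustrative choices, not part of any formal scenario-planning tool.

```python
from itertools import product

# Keys are (act_now_works, techno_fix_works); values are the scenario
# names used in this essay. The boolean framing is a simplification.
SCENARIOS = {
    (True, False): "Dodging a Bullet",
    (True, True): "Teaching the World to Sing",
    (False, True): "Geoengineering 101: Pass/Fail",
    (False, False): "Say Goodnight",
}


def scenario_matrix():
    """Return (act_now_works, techno_fix_works, name) for each combination of the two drivers."""
    return [
        (act_now, techno_fix, SCENARIOS[(act_now, techno_fix)])
        for act_now, techno_fix in product([True, False], repeat=2)
    ]


for act_now, techno_fix, name in scenario_matrix():
    print(
        f"act now: {'works' if act_now else 'fails'} | "
        f"techno-fix: {'works' if techno_fix else 'fails'} | {name}"
    )
```

Because the two drivers are treated as independent, every cell of the matrix gets filled in; adding a third driver would double the number of scenarios to eight.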

Pick your future.

Jamais Cascio co-founded Worldchanging, and wrote over 1,900 articles for the site during his tenure. He now works as a foresight and futures specialist, serving as the Global Futures Strategist for the Center for Responsible Nanotechnology and a Research Affiliate for the Institute for the Future. His current online home is Open the Future.

Biologists at the

Biologists at the  Although the Human Genome Project (and the various plant & animal genome projects that preceded it and continue on) was often hyped as the key to unlocking human biology, it's only the first step in a bigger process. Genes code for proteins. Of far greater utility than a genome map -- and of far greater complexity -- is a map of protein interaction, sometimes called a "proteome." Proteins form the building blocks of tissues, and their interactions are the basis for biological systems. In short, proteins actually carry out the details of being a living being.

Although the Human Genome Project (and the various plant & animal genome projects that preceded it and continue on) was often hyped as the key to unlocking human biology, it's only the first step in a bigger process. Genes code for proteins. Of far greater utility than a genome map -- and of far greater complexity -- is a map of protein interaction, sometimes called a "proteome." Proteins form the building blocks of tissues, and their interactions are the basis for biological systems. In short, proteins actually carry out the details of being a living being. Plastic was to the 1960's what cryonics was to the 1980's -- symbolic of the Future. While freezing one's head after death never really made it to the mainstream, plastics are all around us. With a couple of recent developments, plastic may well again be the wave of the future.

Plastic was to the 1960's what cryonics was to the 1980's -- symbolic of the Future. While freezing one's head after death never really made it to the mainstream, plastics are all around us. With a couple of recent developments, plastic may well again be the wave of the future. At 25 light years away, Vega is one of the closer stars in the night sky, and one of the brightest. British astronomers, working at the James Clerk Maxwell telescope in Hawaii,

At 25 light years away, Vega is one of the closer stars in the night sky, and one of the brightest. British astronomers, working at the James Clerk Maxwell telescope in Hawaii,  If you're even an occasional visitor to Blogistan over the past few days there's no way you could have avoided the abundance of celebratory links about the successful landing of the Mars probe "Spirit" (although I prefer the more dignified official name, "Mars Exploration Rover-A"). Here at WorldChanging, we're certainly ready to do our part to welcome our glorious new Martian overlords. Here are some interesting Mars-related links you may not already have encountered:

If you're even an occasional visitor to Blogistan over the past few days there's no way you could have avoided the abundance of celebratory links about the successful landing of the Mars probe "Spirit" (although I prefer the more dignified official name, "Mars Exploration Rover-A"). Here at WorldChanging, we're certainly ready to do our part to welcome our glorious new Martian overlords. Here are some interesting Mars-related links you may not already have encountered: If you've read Neal Stephenson's brilliant novel The Diamond Age, you will certainly remember his description of "toner wars" -- clouds of carbon-based nanoparticles fighting it out as tools of economic or political dominance. Breathing in the microscopic machines wasn't good for you, but that was related to the various nasty things that the overly-aggressive nanoassemblers might do once in your system. In reality, the danger from such a threat would may have more to do simply with how small they are.

If you've read Neal Stephenson's brilliant novel The Diamond Age, you will certainly remember his description of "toner wars" -- clouds of carbon-based nanoparticles fighting it out as tools of economic or political dominance. Breathing in the microscopic machines wasn't good for you, but that was related to the various nasty things that the overly-aggressive nanoassemblers might do once in your system. In reality, the danger from such a threat would may have more to do simply with how small they are. I

I  In a bit of serendipity, several items about the future of power generation popped up on my radar recently. They nicely demonstrate alternative sources of electricity now, in the near future, and a bit down the road. Quick synopsis: the days of massive generators like the one shown to the right are numbered.

In a bit of serendipity, several items about the future of power generation popped up on my radar recently. They nicely demonstrate alternative sources of electricity now, in the near future, and a bit down the road. Quick synopsis: the days of massive generators like the one shown to the right are numbered. When I was in London earlier this month, I visited the British Museum. The pieces of ancient civilization and the various plunderings of empire were interesting, but what I really wanted to see was the Rosetta Stone (that's my picture of it at right). The Rosetta Stone, found by Napoleon's troops in Egypt in 1799 and transferred to British control in 1802 as a spoil of war, was a largish piece of basalt covered with an official pronouncement about Pharaoh Ptolemy, written in ancient Greek, demotic, and ancient Egyptian hieroglyphics. That dark gray slab embodies a fascinating mix of anthropology, archaeology, and cryptography. Prior to the discovery of the Rosetta Stone, hieroglyphics were considered indecipherable pictograms; after the Rosetta Stone, hieroglyphics were a window into the workings of ancient Egypt. It's entirely possible that, had the Rosetta Stone never been found, the meaning of hieroglyphics would have been lost forever. (Simon Singh's fascinating text on cryptography, The Code Book, has a good chapter on how the Stone led to figuring out hieroglyphics.)

When I was in London earlier this month, I visited the British Museum. The pieces of ancient civilization and the various plunderings of empire were interesting, but what I really wanted to see was the Rosetta Stone (that's my picture of it at right). The Rosetta Stone, found by Napoleon's troops in Egypt in 1799 and transferred to British control in 1802 as a spoil of war, was a largish piece of basalt covered with an official pronouncement about Pharaoh Ptolemy, written in ancient Greek, demotic, and ancient Egyptian hieroglyphics. That dark gray slab embodies a fascinating mix of anthropology, archaeology, and cryptography. Prior to the discovery of the Rosetta Stone, hieroglyphics were considered indecipherable pictograms; after the Rosetta Stone, hieroglyphics were a window into the workings of ancient Egypt. It's entirely possible that, had the Rosetta Stone never been found, the meaning of hieroglyphics would have been lost forever. (Simon Singh's fascinating text on cryptography, The Code Book, has a good chapter on how the Stone led to figuring out hieroglyphics.) The disk is physically etched with words in 1,000 languages, requiring a high-power optical microscope to read. This is a more survivable format than digital media; there's no risk that the particular reader technology will be lost to obsolescence or market whims. The disk contains

The disk is physically etched with words in 1,000 languages, requiring a high-power optical microscope to read. This is a more survivable format than digital media; there's no risk that the particular reader technology will be lost to obsolescence or market whims. The disk contains  Starting from the premise that "lots of copies keeps stuff safe," the disk will be mass-produced and globally distributed. Actually, very shortly it will be extraterrestrially distributed, as well. A copy of the Rosetta Project disk has been fitted to the ESA's

Starting from the premise that "lots of copies keeps stuff safe," the disk will be mass-produced and globally distributed. Actually, very shortly it will be extraterrestrially distributed, as well. A copy of the Rosetta Project disk has been fitted to the ESA's  It's one of those assertions that a reasonable person might immediately dismiss -- sound waves can make bubbles in liquid blow up in such a way that they produce temperatures and pressures equivalent to the inside of the sun. But sonoluminescence is a well-known phenomenon (

It's one of those assertions that a reasonable person might immediately dismiss -- sound waves can make bubbles in liquid blow up in such a way that they produce temperatures and pressures equivalent to the inside of the sun. But sonoluminescence is a well-known phenomenon ( Continuing with my space-themed weekend, I want to give a warm WorldChanging welcome to

Continuing with my space-themed weekend, I want to give a warm WorldChanging welcome to

No, it's not a new sport, it's the

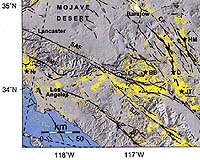

No, it's not a new sport, it's the  Having lived my whole life in California, I've never been particularly frightened of earthquakes. Appropriately concerned, of course, and certainly alarmed during one, but not terrified. This is undoubtedly due to the bolt-from-the-blue nature of quakes; you don't know when the big one is going to hit, so there's no use worrying about it -- just stock up on water, canned food, and blankets, and be ready to deal with it when it happens. Which could be today... or a century from now.

Having lived my whole life in California, I've never been particularly frightened of earthquakes. Appropriately concerned, of course, and certainly alarmed during one, but not terrified. This is undoubtedly due to the bolt-from-the-blue nature of quakes; you don't know when the big one is going to hit, so there's no use worrying about it -- just stock up on water, canned food, and blankets, and be ready to deal with it when it happens. Which could be today... or a century from now. One of the weirder elements of quantum physics (and that's saying something) is particle "entanglement:" two particles (usually photons or electrons, but research suggests larger particles may be entangled, too) are linked in a way that means that any disturbance done to one affects the other, instantly, no matter how far away it is. Einstein called this "spooky action at a distance," and we're now starting to see the possibility of practical applications for it. New Scientist reports that quantum entanglement is the

One of the weirder elements of quantum physics (and that's saying something) is particle "entanglement:" two particles (usually photons or electrons, but research suggests larger particles may be entangled, too) are linked in a way that means that any disturbance done to one affects the other, instantly, no matter how far away it is. Einstein called this "spooky action at a distance," and we're now starting to see the possibility of practical applications for it. New Scientist reports that quantum entanglement is the  3D printing, also known as 3D fabrication, "fabbing," and "stereolithography," is high on my list of potentially ground-shaking technologies -- emphasis on the "potentially." It's been around in various forms for awhile now, but the steady pace of improvement hasn't quite matched the intensity of the excitement around the concept in certain circles. Nonetheless, since the systems do continue to get faster, cheaper, and more precise, a bit of excitement is warranted.

3D printing, also known as 3D fabrication, "fabbing," and "stereolithography," is high on my list of potentially ground-shaking technologies -- emphasis on the "potentially." It's been around in various forms for awhile now, but the steady pace of improvement hasn't quite matched the intensity of the excitement around the concept in certain circles. Nonetheless, since the systems do continue to get faster, cheaper, and more precise, a bit of excitement is warranted. How does a spider stick to the ceiling?

How does a spider stick to the ceiling?  Oh,

Oh,  What's it like to spend three months on another world? Ask the Mars rovers. NASA has created movies of the activities of both

What's it like to spend three months on another world? Ask the Mars rovers. NASA has created movies of the activities of both

I've

I've  Evolution is a pretty amazing process. The combination of internal change (mutation) and environmental pressure (fitness) can have pretty dramatic results, given enough time. And when you do it in a computer, "enough time" can be surprisingly brief.

Evolution is a pretty amazing process. The combination of internal change (mutation) and environmental pressure (fitness) can have pretty dramatic results, given enough time. And when you do it in a computer, "enough time" can be surprisingly brief. Tomorrow, at 7:36pm PDT (10:36pm EDT), the

Tomorrow, at 7:36pm PDT (10:36pm EDT), the  I've been pretty busy lately, but the WC suggestions box still keeps pinging me and my RSS feeds keep pointing me towards new and interesting stuff. Rather than continue to let them pile up, I'm going to do a few QuickChange-style entries collected by category.

I've been pretty busy lately, but the WC suggestions box still keeps pinging me and my RSS feeds keep pointing me towards new and interesting stuff. Rather than continue to let them pile up, I'm going to do a few QuickChange-style entries collected by category. Jon

Jon  Cancer haunts us: its ability to manifest in seemingly-healthy tissue without any evident provocation; the speed with which it can hit -- and the years over which it can linger; the knowledge that, whatever the trigger, it is ultimately the body turning against itself, going mad at a cellular level. While other diseases have emerged with equally (or more) devastating consequences for their victims, cancer holds a deeply-rooted place in the human imagination. A "cure for cancer" stands alongside "living in space" and "thinking machines" as key symbols for many of what The Future will hold.

Cancer haunts us: its ability to manifest in seemingly-healthy tissue without any evident provocation; the speed with which it can hit -- and the years over which it can linger; the knowledge that, whatever the trigger, it is ultimately the body turning against itself, going mad at a cellular level. While other diseases have emerged with equally (or more) devastating consequences for their victims, cancer holds a deeply-rooted place in the human imagination. A "cure for cancer" stands alongside "living in space" and "thinking machines" as key symbols for many of what The Future will hold.

Japan's

Japan's  Okay, so I really don't know what I'd do with one, but this Bluetooth-controlled

Okay, so I really don't know what I'd do with one, but this Bluetooth-controlled  The first million base pairs of the human genome took four years to decode; the second million base pairs took four months. The rate of improvement in the computational ability to sequence DNA base pairs is progressing at a rate comparable to -- and occasionally faster than -- the famous "Moore's Law" doubling curve, a testament to both improvements in processor capability and improvements in process. Within the next ten years, possibly by the end of this decade, biologists will be able to sequence fully a given DNA sample in a matter of minutes. In other words, it will soon be possible to have not just a human genome sequence, but your human genome sequence. Among the many questions which will result will be who owns the DNA, and who will own the process of telling your what your DNA holds?

The first million base pairs of the human genome took four years to decode; the second million base pairs took four months. The rate of improvement in the computational ability to sequence DNA base pairs is progressing at a rate comparable to -- and occasionally faster than -- the famous "Moore's Law" doubling curve, a testament to both improvements in processor capability and improvements in process. Within the next ten years, possibly by the end of this decade, biologists will be able to sequence fully a given DNA sample in a matter of minutes. In other words, it will soon be possible to have not just a human genome sequence, but your human genome sequence. Among the many questions which will result will be who owns the DNA, and who will own the process of telling your what your DNA holds? We've talked about

We've talked about  We've

We've  Sometime after 2010, the European Space Agency will be launching the

Sometime after 2010, the European Space Agency will be launching the  Who would ever think that building something to function deep in Antarctica in the middle of winter would be the cheap option?

Who would ever think that building something to function deep in Antarctica in the middle of winter would be the cheap option? PhysOrg yesterday had two nanotechnology-related reports of particular interest to WorldChangers.

PhysOrg yesterday had two nanotechnology-related reports of particular interest to WorldChangers. The European Space Agency's

The European Space Agency's  It's a recurring element of near-future worlds: the phone (or video screen or implanted chip) that automagically translates spoken phrases to or from your own language, allowing you to converse freely with anyone. No longer shackled to a tour group or three months of an intensive language class, one could travel the world with ease, confident of the ability to communicate with the locals. I suspect this fantasy of a translation device is more prevalent among those of us in cultures less prone to learning multiple languages, but even multilingual global nomads would find occasional technical assistance useful. NEC's pocket spoken-word translation device, as

It's a recurring element of near-future worlds: the phone (or video screen or implanted chip) that automagically translates spoken phrases to or from your own language, allowing you to converse freely with anyone. No longer shackled to a tour group or three months of an intensive language class, one could travel the world with ease, confident of the ability to communicate with the locals. I suspect this fantasy of a translation device is more prevalent among those of us in cultures less prone to learning multiple languages, but even multilingual global nomads would find occasional technical assistance useful. NEC's pocket spoken-word translation device, as  "There are more things in Heaven and Earth, Horatio, than are dreamt of in your philosophies..."

"There are more things in Heaven and Earth, Horatio, than are dreamt of in your philosophies..." Perhaps the most widely-accepted vision of what a greener future will look like is that of the "

Perhaps the most widely-accepted vision of what a greener future will look like is that of the " What if you could create life in a test tube?

What if you could create life in a test tube? One of the classic tropes of 80s cyberpunk is "jacking in" -- connecting one's neural interface from a hardware-augmented brain to the computer networks at large. The neural interface was one of those science fiction technologies that made for good stories, but as a real-world development, it raised all sorts of questions. Who'd want to go through the surgery for that? What about upgrading when better technology came out? And who's going to beta test the thing?!?

One of the classic tropes of 80s cyberpunk is "jacking in" -- connecting one's neural interface from a hardware-augmented brain to the computer networks at large. The neural interface was one of those science fiction technologies that made for good stories, but as a real-world development, it raised all sorts of questions. Who'd want to go through the surgery for that? What about upgrading when better technology came out? And who's going to beta test the thing?!? Two tangentially related space items today -- one about Mars, the other Saturn, both from the ESA.

Two tangentially related space items today -- one about Mars, the other Saturn, both from the ESA. The December 26 tsunami was a deadly reminder that even exceedingly rare natural events can happen, and can have devastating results. The tragedy was compounded by the fact that warnings could have been issued and responded to, but the systems to do so weren't available. But around the same time, we came very close to having a second reminder, one which could have led to an even more terrible result.

The December 26 tsunami was a deadly reminder that even exceedingly rare natural events can happen, and can have devastating results. The tragedy was compounded by the fact that warnings could have been issued and responded to, but the systems to do so weren't available. But around the same time, we came very close to having a second reminder, one which could have led to an even more terrible result. As far as lizards go,

As far as lizards go,  A quantum dot may be tiny, but this development has the potential to be quite big.

A quantum dot may be tiny, but this development has the potential to be quite big. Reports that British researchers are claiming that cutting back the use of fossil fuels will make global warming worse are popping up on the web today, and many strike the headline and text pose of

Reports that British researchers are claiming that cutting back the use of fossil fuels will make global warming worse are popping up on the web today, and many strike the headline and text pose of  We pay attention to developments in polymer electronics for a couple of reasons: they can be flexible, meaning that they can have

We pay attention to developments in polymer electronics for a couple of reasons: they can be flexible, meaning that they can have  As harbingers of the future go, this one has it all: self-assembly, biomimicry, cybernetic integration of biology and machine, and revolutionary potential for both medical applications and swarm robotics. It's very much the kind of scientific report that makes one feel like this is, in fact, the 21st century. As with many such breakthroughs, this one will take some time to play out, but even this early stage is pretty amazing.

As harbingers of the future go, this one has it all: self-assembly, biomimicry, cybernetic integration of biology and machine, and revolutionary potential for both medical applications and swarm robotics. It's very much the kind of scientific report that makes one feel like this is, in fact, the 21st century. As with many such breakthroughs, this one will take some time to play out, but even this early stage is pretty amazing. You'll never look at your ink-jet printer the same way again.

You'll never look at your ink-jet printer the same way again. It's become accepted wisdom that Moore's Law -- the pace at which transistor density increases (or, roughly, the pace at which computers keep getting faster) -- will run into a fabrication wall fairly soon. Etching chips already requires the use of high-energy lithographic techniques, and quantum effects at the smaller and smaller distance between components is becoming harder to deal with. For some pundits, this means the end of the steady growth of computer improvements. They're right, but not in the way they expect. The imminent demise of silicon transistors has led researchers down new pathways with far greater potential than mere doubling every 18 months.

It's become accepted wisdom that Moore's Law -- the pace at which transistor density increases (or, roughly, the pace at which computers keep getting faster) -- will run into a fabrication wall fairly soon. Etching chips already requires the use of high-energy lithographic techniques, and quantum effects at the smaller and smaller distance between components is becoming harder to deal with. For some pundits, this means the end of the steady growth of computer improvements. They're right, but not in the way they expect. The imminent demise of silicon transistors has led researchers down new pathways with far greater potential than mere doubling every 18 months. I had noted the announcement of the

I had noted the announcement of the

Is there life on Mars?

Last month, the Massachusetts Institute of Technology inaugurated its

This month's Technology Review has a fascinating set of stories about how technological development and problems are viewed in seven different countries:

Just in time for

The current issue of Nature includes a report by

It's entirely possible that one of the most important technological innovations of the 20th century will turn out to have been the lowly ink-jet printer. As it happens, the technology that makes it possible to squirt minute quantities of ink in precise patterns onto a sheet of paper is perfect for spraying out other materials (such as resin, plastic and even

This is

While much attention is (rightfully) given to the dilemmas surrounding the provision of AIDS drugs in the developing world, medication is not the only expense incurred in the fight against HIV. Accurate diagnosis and monitoring of AIDS can be costly, and is often only available in large urban hospitals. As individuals can have varying reactions to treatment, accurate measurement of T cells is critical for determining whether an intervention is successful. The cost of technologies to perform those counts -- called "flow cytometry" -- can be a barrier to effective treatment.

Most of us who grew up in the US (and quite probably many outside the US, as well) know of the "

With the right mixture, water and salt can work wonders.

Books existed well before the invention of the printing press, but they were individual, painstakingly-crafted affairs. The printing press meant that books could be assembled both more easily -- itself a revolutionary development -- and more consistently. Mass production, as we conceive of it today, has its roots in the printing press. Now that concept is set to be applied to the nanoworld.

Looks like

Bacteria that can

A variety of stories about ideas and technologies for energy generation have popped up recently, and all seem worth paying a bit of attention to. Rather than QuickChange them one at a time, I'm going to mix them all together here and see what results.

If you've read the more esoteric nanotechnology treatises, you're undoubtedly familiar with the concept of "programmable matter" -- micro- or nano-scale devices which can combine to form an amazing assortment of physical objects, reassembling into something entirely different as needed. This vision of nanotechnology is light years away from today's world of carbon nanotubes or even the practical-but-amazing world of nanofactories. It shouldn't surprise you, however, to note that -- despite its fantastical elements -- serious research is already underway heading down the path to programmable matter.

We've

Nanoscientists and biochemical engineers are starting to play with DNA not as replicating code, but as a physical tool for nanoassembly. Researchers at Arizona State University and at New York University have independently come up with ways to use DNA as a framework upon which to build sophisticated molecules. The work has implications for biochemical sensors, drug analysis, even DNA computing. And while the practical applications of these specific techniques are interesting, their larger importance is as a demonstration of the increasing sophistication of our ability to work at the nanoscale.

It may be a while before it shows up on your desktop, but the Pacific Northwest National Laboratory has just

Geobacter is quite the interesting genus of bacteria. As extremophiles, they can live quite happily under conditions too toxic for most creatures big or small. Moreover, many Geobacter microbes are able to convert those toxins into materials far less dangerous -- a process referred to as "

This is one of the first pictures of what the inside of a comet looks like.

It's generally understood how genetic information is spread: from an organism to its offspring. For this reason, the traditional representation of evolution and the relationship between organisms is portrayed as a "tree," with an increasing number of branches emanating from the origin. But it turns out that this well-understood structure doesn't apply to microbes.

It's often tempting to think about the microchip revolution in terms of computers and communication devices, bits of machinery with functions obviously derived from their digital components. But microprocessors have had some of their most worldchanging effects in the realm of biomedicine, and not just in terms of faster computer sequencing of DNA. Two more examples of this have come up this week: one to monitor the progress of HIV infections, and the other to keep watch for pathogens of all types. Both will see the greatest use -- and greatest impact -- in the developing world.

Researchers at UC Berkeley have developed a method of

Broadly put, there are two approaches to fighting cancers: chemotherapy, which is highly toxic to both cancerous and healthy cells; and anti-angiogenesis, which attacks cancers by cutting off their blood supply. Both have drawbacks -- chemo can kill much more than cancer cells (and cancers can develop resistance to it), and anti-angiogenesis can trigger cancer survival responses such as metastasis -- and while they are in principle complementary, the very blood vessels cut off by anti-angiogenesis are those needed to deliver the iterative rounds of chemotherapy.

In March of 2004, we offered a hearty "

Okay, enough.

We know that when animals undergo stress, biochemical changes result, some of which may be situationally useful (such as increased alertness), and some of which may be deleterious if too frequent or too persistent (such as increased blood pressure). It turns out that plants have a biochemical reaction to stress, as well. Stress, for plants, tends to mean environmental conditions outside the range for which they evolved -- too hot, too cold, insufficient sunlight or moisture or CO2, etc. A typical plant response to such conditions is to shut down its own metabolism, to stop growing and to stop producing seeds and pollen.

Inexpensive, easily-made medical sensors and disposable testing kits need inexpensive, easily-made power sources, but until now, these have been difficult to come by. "

In January, we

This is likely the biggest technological breakthrough of the year, arguably even of the decade.

One of the reasons that many people with solid scientific backgrounds have concerns about the use of genetic modification techniques in agriculture is the degree to which the potential for unintended consequences seems to be downplayed. This is especially true when microbial genetic material is involved. Unlike more complex organisms, bacteria can spread changes to their genomes through methods other than traditional reproduction; "

"Nanotechnology" gets a great deal of attention these days, including here at WorldChanging, and for good reason. The ability to create materials and operate machines that have useful properties at the nano-scale (about a billionth of a meter, or roughly the size of molecules) has the potential for dramatic changes in realms as diverse as

The question of how to respond to warnings that a Near Earth Object (NEO) is on an eventual collision course with our home planet is a minor recurring theme here at WorldChanging. In a way, it's one of the clearer issues that we grapple with -- there are no questions of human culpability or poor planning decisions to make the problem more complex. The reality is that our planetary neighborhood is pretty dangerous, and that one day, one of the thousands upon thousands of asteroids and comets swirling about our solar system is going to have Earth's name on it.

Researchers at North Carolina State University have

Sometimes, the universe has excellent timing. It's

When I first saw the link to this story, I thought it was yet another

Please note that this article has been updated from its original text, correcting a couple of mistakes. -- Jamais

Bioremediation is the process of using living organisms -- typically plants or microbes -- to remove toxic material from the environment. Previous examples we've noted include

Our future will be built with ink-jet printers. Not only can we print out

Here's another indication that the ink-jet future is upon us (and it's likely to be a more palatable example than the

It's fascinating to watch the future emerge, piece by piece. Organic polymer electronic materials are very appealing, from a WorldChanging perspective: they

It turns out that a little bit of math can both

Researchers at Arizona State University have come up with a truly ingenious way to make large amounts of usable antigens for the creation of vaccines --

Could the future of energy be found in a "star in a jar"?

Can we avoid climate disaster simply by cutting back radically on the emission of greenhouse gases? Possibly not, and therein lies a problem. Because of the

Opponents of animal testing for medical research often argue that the same tests could be performed via computer simulation; researchers counter that simulations simplify physiology too much to be useful in that way. But such a claim may be in its final era -- we now have the first functional, down-to-the-atom simulation of a biological organism. Computational biologists at the University of Illinois at Urbana-Champaign and crystallographers at the University of California at Irvine have created a